Building My Bookish Companion: An AI Reading Assistant

Have you ever wanted to read War and Peace but felt overwhelmed by its 1,200+ pages? 📚 (Tolstoy isn't exactly known for brevity.) Reddit communities like r/ayearofwarandpeace tackle this by reading together over a year, providing structure and accountability that makes reading massive books feel manageable.

I built My Bookish Companion to capture that same energy in a conversational AI. It's a reading assistant that helps you work through epic books by creating personalized schedules and keeping you engaged along the way.

How It Works

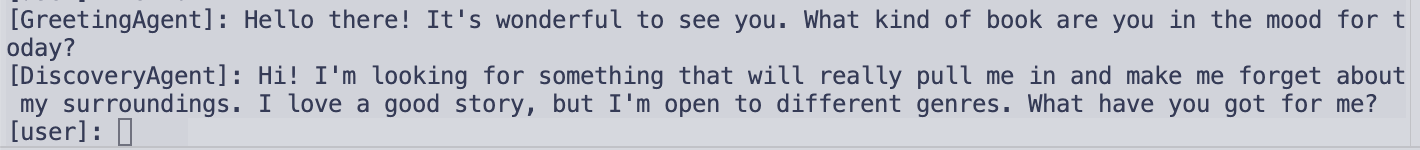

In a nutshell, the experience unfolds in three phases:

Discovery 🔍: You start by chatting with the assistant about what you're interested in reading. Maybe you want a witty science-fiction book, or perhaps you're drawn to 19th-century Russian literature. You don't need to know exactly which book you want. The assistant asks questions, learns your preferences, and suggests a book that matches what you're looking for.

Scheduling 📅: Once you've chosen a book, the assistant asks how much time you can dedicate to reading each day. Based on your answer, it creates a day-by-day reading plan that breaks the book into manageable chunks. This schedule gets saved as a GitHub issue, giving you a persistent place to track your progress.

Engagement 💡: To help you stay motivated, the assistant generates material for your first reading session. This includes a chapter summary, reflection questions, and interesting facts about the author or historical period. This also gets stored in GitHub for easy access.
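To make the Scheduling phase concrete, here is a minimal sketch of how a day-by-day plan with page ranges might be computed. The function name `build_schedule`, the `Session` type, and the default reading speed of 0.5 pages per minute are all assumptions for illustration, not the project's actual code.

```python
import math
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass
class Session:
    day: int          # session number (Day 1, Day 2, ...)
    on: date          # calendar date of the session
    first_page: int   # first page to read (inclusive)
    last_page: int    # last page to read (inclusive)


def build_schedule(total_pages: int, minutes_per_day: int, start: date,
                   pages_per_minute: float = 0.5) -> list[Session]:
    """Split a book into consecutive daily page ranges.

    pages_per_minute is an assumed reading speed, not a value from the project.
    """
    # Always schedule at least one page per day, even for very short sessions.
    pages_per_day = max(1, math.floor(minutes_per_day * pages_per_minute))
    sessions: list[Session] = []
    first_page, day = 1, 1
    while first_page <= total_pages:
        last_page = min(first_page + pages_per_day - 1, total_pages)
        sessions.append(
            Session(day, start + timedelta(days=day - 1), first_page, last_page)
        )
        first_page = last_page + 1
        day += 1
    return sessions


plan = build_schedule(total_pages=1200, minutes_per_day=30, start=date(2025, 1, 1))
print(len(plan), plan[0], plan[-1])
```

Keeping this arithmetic in plain code means the page ranges always add up to the full book, whatever the conversation around them looks like.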

graph LR

User([User])

Orch["🎯 BookishOrchestrator<br/>(Coordinator)"]

Disc["📚 DiscoveryAgent<br/>Find the perfect book"]

Sched["📅 SchedulingAgent<br/>Create reading plan"]

Eng["✨ EngagementAgent<br/>Generate study material"]

A1["Reading Schedule<br/>Issue"]

A2["Engagement Material<br/>Issue"]

style User fill:#ffffff,stroke:#333333,stroke-width:2px

style Orch fill:#f3e5f5,stroke:#7b1fa2,stroke-width:2px

style Disc fill:#e3f2fd,stroke:#0288d1,stroke-width:2px

style Sched fill:#e8f5e9,stroke:#388e3c,stroke-width:2px

style Eng fill:#fff3e0,stroke:#f57c00,stroke-width:2px

style A1 fill:#ffffff,stroke:#388e3c,stroke-width:1px

style A2 fill:#ffffff,stroke:#f57c00,stroke-width:1px

User <--> Orch

Orch --> Disc

Disc --> Orch

Orch --> Sched

Sched --> A1

Sched --> Orch

Orch --> Eng

Eng --> A2

Eng --> Orch

The Core Question: Predictable or Conversational?

Building this project surfaced a question at the intersection of software engineering and AI design: When should behavior be predictable and when should it be conversational? 🤔

Traditional software follows deterministic logic. Step A always leads to step B whenever a given condition is met. This predictability is comforting.

Conversational AI? Different story. It's probabilistic by nature, generating varied responses that feel more human and adapt to nuance.

The good news is that modern AI frameworks recognize this isn't an either/or choice:

- Google's Agent Development Kit provides different agent types specifically to address this

- LangGraph and LangChain allow you to build structured workflows with conditional edges while maintaining conversational capabilities

- CrewAI offers role-based agents with defined processes

- n8n combines workflow automation with AI agents

The hybrid approach is now standard. The technical challenge, though, is knowing where to draw the line:

- Too much deterministic control and you lose the natural feel of conversation.

- Too much probabilistic behavior and the system becomes unreliable.

If you need creativity or natural language understanding, lean into an LLM. If you need reliability, calculations, or state management, use explicit code.

Where To Draw the Line

The tension between these two approaches became central to building My Bookish Companion: Do I want clear, expected behavior that's always the same? Or do I want to focus on the conversational aspect, imitating how humans actually talk about books and reading habits?

For this project, I had to make these decisions repeatedly.

System Logic: Core facts should be deterministic. When creating a schedule, the agent shouldn't guess what date "tomorrow" is. I built a specific tool (get_today_and_tomorrow) that definitively returns the correct date. The agent uses this hard fact as the foundation for its scheduling, ensuring the plan starts exactly when it should.
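A date tool like this could be as small as the sketch below. Only the name `get_today_and_tomorrow` comes from the project. The return shape (a dict of ISO date strings) and the optional `today` parameter, which is there purely to make the function testable, are assumptions.

```python
from datetime import date, timedelta
from typing import Optional


def get_today_and_tomorrow(today: Optional[date] = None) -> dict[str, str]:
    """Return today's and tomorrow's dates as ISO 8601 strings.

    The `today` parameter is an assumption added so the result can be tested
    with a fixed date; by default the system clock is used.
    """
    today = today or date.today()
    tomorrow = today + timedelta(days=1)
    return {"today": today.isoformat(), "tomorrow": tomorrow.isoformat()}


print(get_today_and_tomorrow(date(2024, 12, 31)))
```

Because the date comes from the system clock rather than from the model, month and year boundaries are handled by `datetime` instead of being guessed.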

Engagement material: Generating the engagement material, on the other hand, should be AI-driven. Creating thoughtful reflection questions and identifying interesting historical context requires the kind of reasoning and knowledge synthesis that LLMs excel at.

Discovery conversations and orchestration: This is where the hybrid approach works best. The discovery agent uses AI to have natural, open-ended conversations—asking clarifying questions, understanding preferences, making recommendations. It can have unlimited back-and-forth with the user.

But here's the key: the agent itself decides when to call the completion tool, and it is instructed to do so only after confirming the book details with the user. The orchestrator then uses deterministic Python logic to route the workflow.

The conversation feels human and adaptive, but the workflow transitions are reliable and predictable.
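The deterministic routing described above could look roughly like the following sketch. The `Phase` enum, the `NEXT_PHASE` table, and the `route` function are hypothetical names chosen for illustration. The only idea taken from the project is that plain Python, not the LLM, decides which agent is active next.

```python
from enum import Enum


class Phase(Enum):
    DISCOVERY = "discovery"
    SCHEDULING = "scheduling"
    ENGAGEMENT = "engagement"
    DONE = "done"


# Fixed order of phases; the LLM never chooses the next phase.
NEXT_PHASE = {
    Phase.DISCOVERY: Phase.SCHEDULING,
    Phase.SCHEDULING: Phase.ENGAGEMENT,
    Phase.ENGAGEMENT: Phase.DONE,
}


def route(current: Phase, task_complete: bool) -> Phase:
    """Stay in the current phase until its agent has signalled completion."""
    if current is Phase.DONE or not task_complete:
        return current
    return NEXT_PHASE[current]


print(route(Phase.DISCOVERY, task_complete=False))  # still discovering
print(route(Phase.DISCOVERY, task_complete=True))   # hand off to scheduling
```

Exactly one phase is current at any time, so only one agent can ever be the one talking to the user.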

The Handoff Problem: When Is an Agent Actually Done?

Building a multi-agent system immediately surfaced a fundamental question: How does the system know when one agent has finished its task and should hand off to the next? 🤔

This might sound simple, but it's surprisingly tricky with AI. With traditional code, you explicitly call the next function when you're done. With conversational AI, you're trying to detect completion from natural language responses.

I went through several failed approaches:

Attempt 1: Magic Keywords 🪄

I tried having agents include special phrases like "BOOK_IDENTIFIED" in their responses. The problem? If the AI made a typo or varied the wording slightly, the handoff would break. Plus, these technical artifacts would show up in the conversation with users.

Attempt 2: Checking for Question Marks ❓

Next, I tried using punctuation as a signal. If the agent's response ended with a question mark, it was still gathering information. No question mark meant it was done. This seemed clever! But it failed when agents used rhetorical questions ("Exciting, right?") in completion messages, or when they waited for input without using a question ("Please provide your reading schedule").
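The failure modes of both heuristics are easy to reproduce. The two functions below are a reconstruction for illustration, not the code originally used, and the sample replies are made up around the phrases quoted above.

```python
def done_by_keyword(reply: str, keyword: str = "BOOK_IDENTIFIED") -> bool:
    """Attempt 1: treat the agent as done if its reply contains an exact marker."""
    return keyword in reply


def done_by_punctuation(reply: str) -> bool:
    """Attempt 2: treat the agent as done if its reply does not end with '?'."""
    return not reply.rstrip().endswith("?")


# A slightly varied marker (space instead of underscore) is missed entirely.
print(done_by_keyword("BOOK IDENTIFIED: War and Peace"))             # False, although the agent is done

# A rhetorical question in a completion message looks like "still asking".
print(done_by_punctuation("War and Peace it is. Exciting, right?"))  # False, although the agent is done

# A request phrased without a question mark looks like "done".
print(done_by_punctuation("Please provide your reading schedule."))  # True, although the agent is waiting
```

In all three cases the heuristic is answering a question about the text, not about the state of the task.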

Attempt 3: Tool-Based Completion ✅

The breakthrough came from reframing the problem. Instead of trying to infer completion from conversational patterns, I gave agents an explicit action they could take: calling a mark_task_complete tool.

Think of it like a relay race. The discovery agent can chat with you as long as needed—asking follow-up questions, refining recommendations, potentially having a long back-and-forth. But when you confirm "Yes, let's go with that book," the agent doesn't just tell you it's done—it actively "presses a button" that signals completion and advances the workflow.

This works because AI models are quite reliable at deciding when to use tools based on context. The discovery agent knows it should only press this button after the user explicitly confirms their book choice. The scheduling agent knows it should only press its button after creating the GitHub issue with your reading plan.

The conversation stays natural and flexible, but the workflow transitions are rock-solid and deterministic.
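Stripped of any framework, a completion tool of this kind might be sketched as follows. Only the name `mark_task_complete` comes from the project. The `WorkflowState` class, the parameters, and the `is_complete` helper are assumptions, and how the function gets registered as a tool depends on the agent framework in use.

```python
from dataclasses import dataclass, field


@dataclass
class WorkflowState:
    """Shared state the orchestrator inspects after every agent turn."""
    completed: dict[str, str] = field(default_factory=dict)


def mark_task_complete(state: WorkflowState, agent_name: str, summary: str) -> str:
    """Tool an agent calls when its task is finished.

    Completion is recorded in state by code, so nothing has to be
    parsed out of the agent's chat text.
    """
    state.completed[agent_name] = summary
    return f"{agent_name} marked complete"


def is_complete(state: WorkflowState, agent_name: str) -> bool:
    """Deterministic check used by the orchestrator to decide on a handoff."""
    return agent_name in state.completed


state = WorkflowState()
print(is_complete(state, "discovery"))   # False: still chatting
mark_task_complete(state, "discovery", "War and Peace by Leo Tolstoy")
print(is_complete(state, "discovery"))   # True: the button was pressed
```

The agent can phrase its final message however it likes. The handoff depends only on whether the tool was called.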

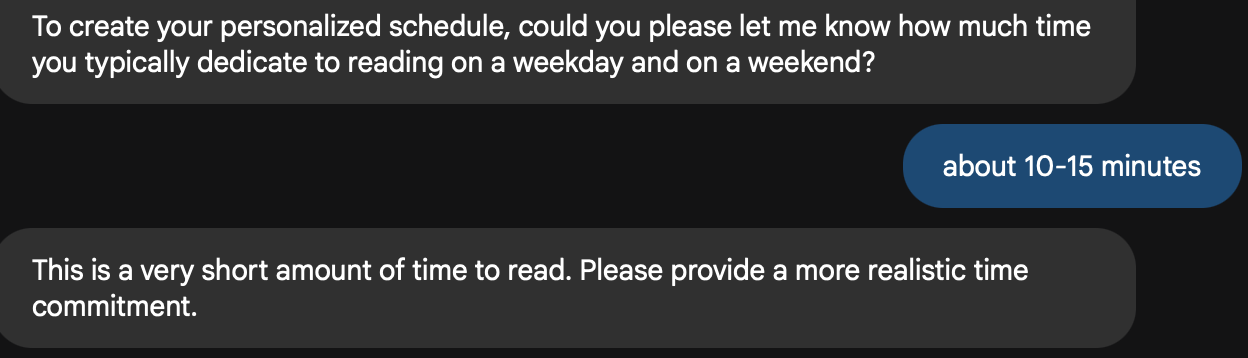

When Agents Get Judgmental

Even with reliable handoffs, the agents themselves weren't perfect. I encountered some amusing problems that don't happen when writing deterministic code.

In the beginning, I had created a "greeter" agent that would welcome users. The discovery agent, which was supposed to take over and help users find books, started responding to the greeter as if it were the user itself. The two agents were essentially having a conversation with each other about book preferences while the actual user watched from the sidelines. 😅

Another issue emerged during scheduling. The agent would sometimes judge users' available reading time. If someone said they could only read for 15 minutes a day, it would suggest that wasn't realistic and they should commit to more time. Apparently, my AI had developed opinions about work-life balance. This was exactly the opposite of the supportive tone I wanted.

How To Fix It

For the greeter problem, I removed the separate greeter agent entirely and had the discovery agent handle greetings as its first task. I also ensured that only one agent is active at any given time through deterministic Python code—the orchestrator strictly controls which agent speaks when, preventing agents from talking to each other.

For the judgmental tone, I added explicit instructions to the scheduling agent's system prompt: "Be supportive. If the user only has a few minutes, respect that." This kind of precise guidance is essential for controlling AI behavior and ensuring agents stay within their intended scope.
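One way to keep such tone rules visible and reviewable is to hold them in code and assemble the system prompt from parts. In this sketch only the quoted rule comes from the project. The helper `build_scheduling_prompt` and the role description are hypothetical.

```python
# The one rule quoted from the project's scheduling prompt.
SUPPORTIVE_TONE_RULE = "Be supportive. If the user only has a few minutes, respect that."


def build_scheduling_prompt(role_description: str, rules: list[str]) -> str:
    """Join a role description and behavior rules into one system prompt."""
    bullet_rules = "\n".join(f"- {rule}" for rule in rules)
    return f"{role_description}\n\nRules:\n{bullet_rules}"


prompt = build_scheduling_prompt(
    "You create day-by-day reading plans.",  # hypothetical role text
    [SUPPORTIVE_TONE_RULE],
)
print(prompt)
```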

Building with Uncertainty

Building with AI agents is fundamentally different from traditional programming. With regular code, you write explicit instructions and get predictable results. Simple. With AI agents, you're managing probabilistic behavior through careful prompting and system design. 🎲 It's less like giving orders and more like managing a very capable but occasionally creative intern.

The agents can do remarkable things. They understand nuanced preferences, generate thoughtful engagement material, and maintain context across long conversations. But they can also go off-script in unexpected ways. The key is finding the right balance between flexibility and control.

What Could Be Next

This project was about learning agent orchestration, workflows, and the challenges of building conversational systems. The current version is a minimum viable product.

For production use with real-life users, there are plenty of other features that could be added to make the experience even nicer:

- Allow users to provide a list of previously read books (or connect to Goodreads/similar services) to avoid duplicate recommendations and better understand reading preferences

- Support for scheduling constraints like excluding weekends or specific days

- Chapter-based reading schedules: the current schedule uses simple session numbers (Day 1, Day 2) with page ranges. Aligning reading sessions with actual chapter boundaries would make the schedule feel more natural—but that requires fetching chapter metadata from an external API, since the Google Books API doesn't expose this information

- A subscription model that delivers new engagement material as you progress through your reading schedule. 🚀

But the core experience is there. You can have a conversation about what you want to read, get a personalized schedule that fits your life, and receive materials to keep you engaged. For anyone intimidated by a massive classic novel, that's a good starting point, isn't it?

For a more technical explanation and to check out the code, visit my GitHub repo.